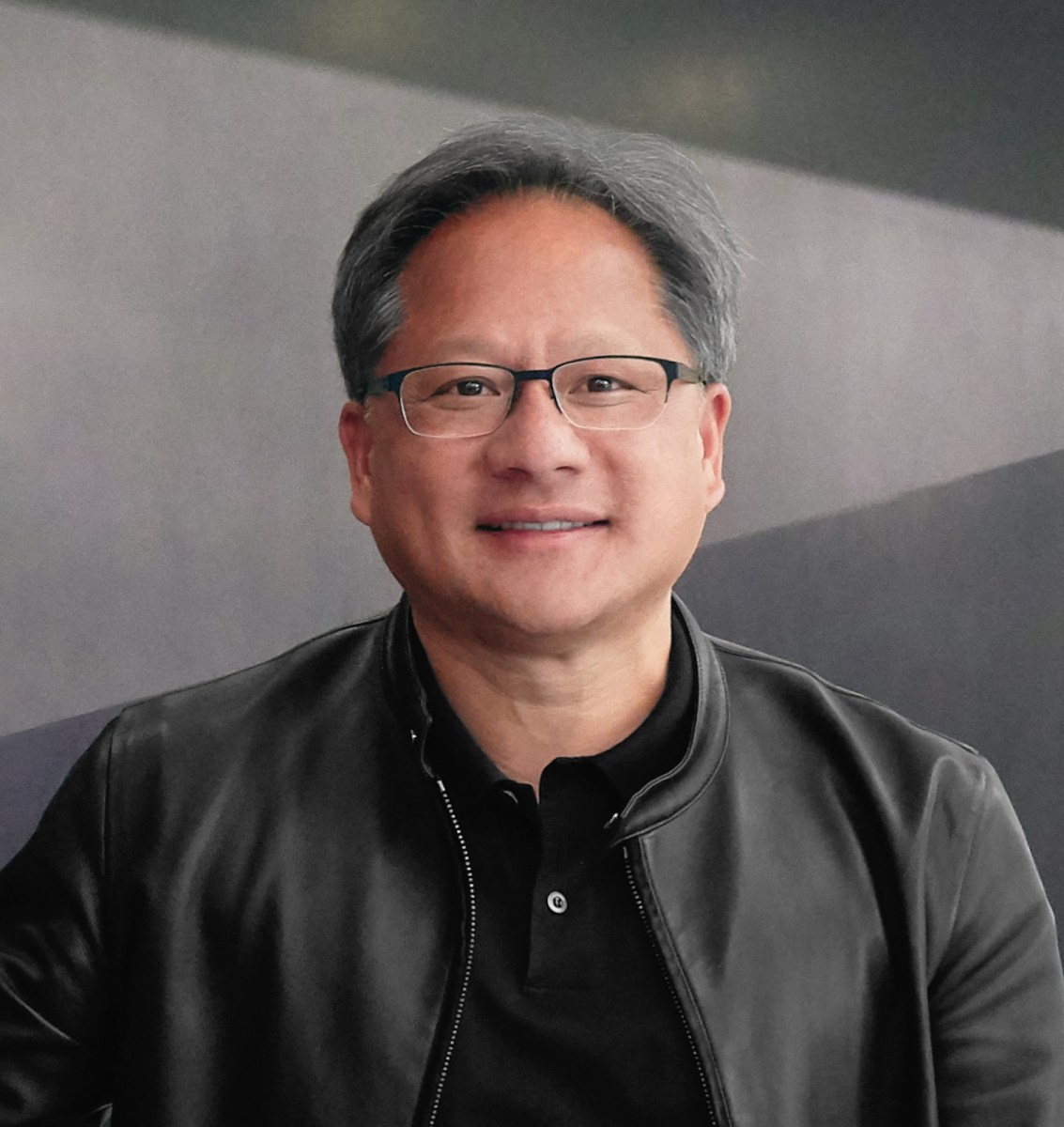

Jensen Huang wants to bring generative AI to every data center, the Nvidia co-founder and CEO said during Computex in Taipei today. During the speech, Huang’s first public speech in nearly four years, he made a slew of announcements, including chip release dates, the DGX GH200 supercomputer and partnerships with major companies. Here’s all the news from the two-hour keynote.

1. Nvidia’s GeForce RTX 4080 Ti GPU for gamers is now in full production and is being mass-produced with partners in Taiwan.

2. Huang announced the Nvidia Avatar Cloud Engine (ACE) for games, a customizable AI model foundry service with pre-trained models for game developers. NPCs will be given more personality through AI-powered language interactions.

3. The Nvidia CUDA computing model now serves four million developers and more than 3,000 applications. CUDA has seen 40 million downloads, including 25 million in the last year alone.

4. The HGX H100 server GPU is now in full production and is being manufactured by “companies across Taiwan,” Huang said. He added that it is the first computer in the world with a transformer engine.

5. Huang referred to Nvidia’s 2019 acquisition of computer chip giant Mellanox for $6.9 billion as “one of the greatest strategic decisions” the company has ever made.

6. Production of the next generation of Hopper GPUs will begin in August 2024, exactly two years after the start of manufacture of the first generation.

7. Nvidia’s GH200 Grace Hopper is now in full production. The superchip boasts 4 PetaFLOPS TE, 72 Arm CPU cores connected via a chip-to-chip link, 96GB of HBM3 memory and 576GB of GPU memory. Huang described it as the world’s first accelerated computing processor that also has giant memory: “This is a computer, not a chip.” It is designed for highly flexible data center applications.

8. If the Grace Hopper’s memory isn’t enough, Nvidia has the solution: the DGX GH200. It is built by first connecting eight Grace Hoppers with three NVLink switches, then connecting the pods together at 900GB per second. Finally, 32 of these are joined together with another layer of switches, connecting a total of 256 Grace Hopper chips. The resulting ExaFLOPS Transformer Engine system has 144TB of GPU memory and acts as one giant GPU. Huang said the Grace Hopper is so fast that it can run the 5G stack in software. Google Cloud, Meta and Microsoft will be the first companies to have access to the DGX GH200 and will conduct research on its capabilities.

9. Nvidia and SoftBank have partnered to bring the Grace Hopper superchip to SoftBank’s new distributed data centers in Japan. The data centers will be able to host generative AI and wireless applications on a shared multi-tenant server platform, reducing costs and energy use.

10. The SoftBank-Nvidia partnership will be based on the Nvidia MGX reference architecture, which is currently used in partnership with companies in Taiwan. It gives system manufacturers a standard reference architecture to help them build more than 100 server variations for AI, accelerated computing, and Omniverse uses. Companies participating in the partnership include ASRock Rack, Asus, Gigabyte, Pegatron, QCT, and Supermicro.

11. Huang announced the Spectrum-X accelerated networking platform, which is meant to increase the speed of Ethernet-based clouds. It includes the Spectrum-4 switch, which has 128 ports of 400Gbps each and 51.2Tbps of total capacity. The switch is designed to enable a new type of Ethernet, Huang said, and is built end-to-end for adaptive routing, performance isolation and in-fabric computing. The platform also includes the BlueField-3 SmartNIC, which connects to the Spectrum-4 switch to perform congestion control.

12. WPP, the world’s largest advertising agency, has partnered with Nvidia to develop a content engine based on the Nvidia Omniverse. It will be able to produce photo and video content for use in advertising.

13. The Nvidia Isaac AMR robot platform is now available to anyone who wants to build robots, and it is a full stack, from chips to sensors. Huang said Isaac AMR starts with a chip called Nova Orin and is the first full robotics reference stack.
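Several of the hardware figures in the list above can be cross-checked with simple arithmetic. The sketch below is only a sanity check, not anything Nvidia published: the function names are made up for illustration, the 576GB of GPU-addressable memory per Grace Hopper superchip is an assumption taken from Nvidia's spec sheets, and 1TB is assumed to mean 1,024GB.

```python
# Rough sanity check of the keynote figures quoted in the list above.
# Assumptions (labeled, not from the keynote itself):
#   - each Grace Hopper superchip exposes 576 GB of GPU-addressable memory
#   - 1 TB is counted as 1024 GB
# All function names are hypothetical helpers for this illustration.

def switch_capacity_tbps(ports: int, gbps_per_port: int) -> float:
    """Aggregate switch capacity in Tbps for identical ports."""
    return ports * gbps_per_port / 1000


def dgx_chip_count(chips_per_pod: int, pods: int) -> int:
    """Total superchips when identical pods are joined together."""
    return chips_per_pod * pods


def total_memory_tb(chips: int, gb_per_chip: int) -> float:
    """Combined GPU-addressable memory in TB (1 TB = 1024 GB)."""
    return chips * gb_per_chip / 1024


if __name__ == "__main__":
    # Spectrum-4: 128 ports at 400 Gbps each
    print("Spectrum-4 capacity (Tbps):", switch_capacity_tbps(128, 400))

    # DGX GH200: pods of 8 Grace Hoppers, 32 pods joined together
    chips = dgx_chip_count(8, 32)
    print("Grace Hopper chips:", chips)

    # Memory across the whole system, under the 576 GB assumption
    print("GPU memory (TB):", total_memory_tb(chips, 576))
```

Under those assumptions the numbers line up with the article: 128 ports at 400Gbps give 51.2Tbps, 8 chips times 32 pods give 256 chips, and 256 chips at 576GB each come to 144TB.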

Thanks to its importance in AI computing, Nvidia shares have soared over the past year, and its market capitalization is currently around $960 billion, making it one of the most valuable companies in the world (only Apple, Microsoft, Saudi Aramco, Alphabet, and Amazon rank higher).

Business in China in limbo

There is no doubt that AI companies in China are keeping a close eye on the latest silicon that Nvidia is bringing to the table. Meanwhile, they may fear another round of US chip bans that threatens to undermine their progress in generative AI, which requires far more computing power and data than previous generations of AI.

Last year, the US government banned Nvidia from selling A100 and H100 GPUs to China. Both chips are used to train large language models such as OpenAI’s GPT-4. The H100, Nvidia’s latest-generation chip based on the Hopper GPU computing architecture with an integrated Transformer Engine, is seeing particularly strong demand. Compared to the A100, the H100 is capable of delivering 9x faster AI training and up to 30x faster AI inference on LLMs.

China is clearly too big a market to miss. The chip export ban was estimated to cost Nvidia $400 million in potential sales in the third quarter of last year alone. So Nvidia resorted to selling China a slower chip that met US export control rules. But in the long term, China will likely look for more powerful alternatives, and the ban serves as a poignant reminder for China to achieve self-reliance in key technology sectors.

As Huang said recently in an interview with the Financial Times: “If [China] can’t buy from … the US, they’ll build it themselves. So the United States must be careful. China is a very important market for the tech industry.”
