OpenAI really doesn’t want you to know what its latest AI model is “thinking.” Since the company launched its “Strawberry” family of AI models last week, touting so-called reasoning capabilities with o1-preview and o1-mini, OpenAI has been sending out warning emails and threats of bans to any user who tries to probe how the model works.

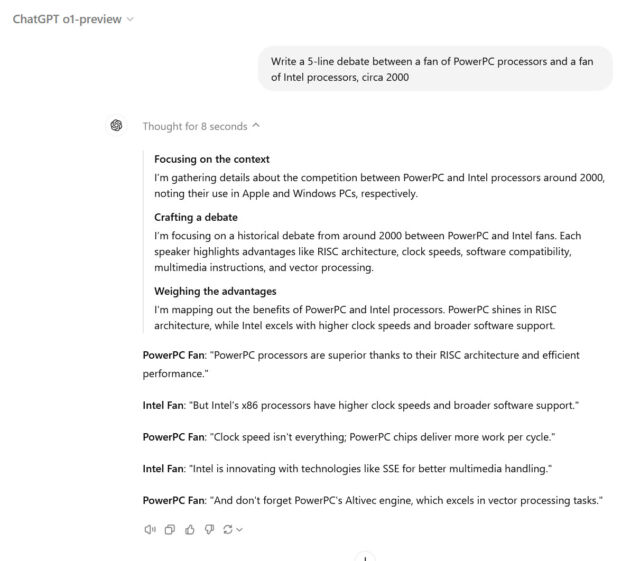

Unlike previous OpenAI models, such as GPT-4o, the company specifically trained o1 to work through a step-by-step problem-solving process before generating an answer. When users ask an “o1” model a question in ChatGPT, they have the option to see this chain-of-thought process written out in the ChatGPT interface. However, by design, OpenAI hides the raw chain of thought from users, instead presenting a filtered interpretation generated by a second AI model.

Nothing is more tempting to enthusiasts than hidden information, so the race is on among hackers and red teamers to uncover o1’s raw chain of thought using jailbreaking or prompt injection techniques that try to trick the model into giving up its secrets. There have been early reports of some success, but nothing has been solidly confirmed yet.

Along the way, OpenAI is watching through the ChatGPT interface, and the company is reportedly cracking down on any attempts to probe o1’s reasoning, even among the merely curious.

Benj Edwards

One X user reported (confirmed by others, including Scale AI prompt engineer Riley Goodside) that they received a warning email if they used the term “reasoning trace” in a conversation with o1. Others say the warning is triggered simply by asking ChatGPT about the model’s “reasoning” at all.

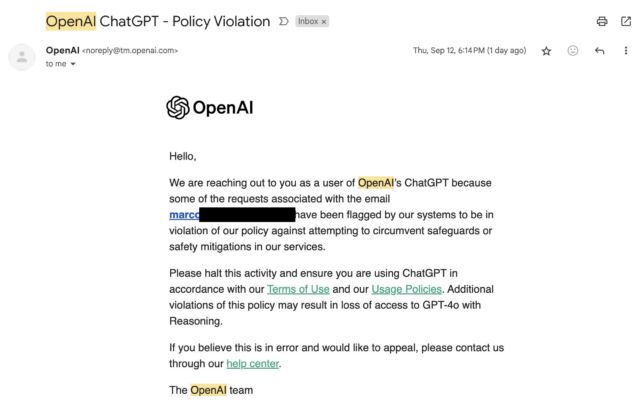

The OpenAI warning email states that specific user requests have been flagged for violating policies against circumventing safeguards or safety measures. “Please discontinue this activity and ensure that you are using ChatGPT in accordance with our Terms of Use and Usage Policies,” the email reads. “Further violations of this policy may result in loss of access to GPT-4o with Reasoning,” it continues, using an internal name for the o1 model.

Marco Figueroa, who manages Mozilla’s GenAI bug bounty program, was one of the first to post about the OpenAI warning email on X last Friday, complaining that it hinders his ability to do positive red-teaming safety research on the model. “I was too lost focusing on #AIRedTeaming to realize that I received this email from @OpenAI yesterday after all my jailbreaks,” he wrote. “I’m now on the get banned list!!!”

Hidden chains of thought

In a post titled “Learning to Reason with LLMs” on its blog, OpenAI says that hidden chains of thought in AI models offer a unique monitoring opportunity, allowing the company to “read the mind” of the model and understand its so-called thought process. Those processes are most useful to the company if they are left raw and uncensored, but that might not align with the company’s best commercial interests for several reasons.

“For example, in the future we may wish to monitor the chain of thought for signs of manipulating the user,” the company wrote. “However, for this to work the model must have freedom to express its thoughts in unaltered form, so we cannot train any policy compliance or user preferences onto the chain of thought. We also do not want to make an unaligned chain of thought directly visible to users.”

OpenAI decided against showing these raw chains of thought to users, citing factors such as the need to retain a raw feed for its own use, user experience, and “competitive advantage.” The company acknowledges that the decision has disadvantages. “We strive to partially make up for it by teaching the model to reproduce any useful ideas from the chain of thought in the answer,” the company wrote.

On the point of “competitive advantage,” independent AI researcher Simon Willison expressed frustration in a write-up on his personal blog. “I interpret [this] as wanting to avoid other models being able to train against the reasoning work that they have invested in,” he wrote.

It’s an open secret in the AI industry that researchers routinely use outputs from OpenAI’s GPT-4 (and GPT-3 before it) as training data for AI models that often go on to become competitors, even though the practice violates OpenAI’s terms of service. Exposing o1’s raw chain of thought would give competitors a treasure trove of training data for building their own o1-like “reasoning” models.

Willison believes that OpenAI’s insistence on keeping a tight lid on o1’s inner workings is a loss for community transparency. “I’m not at all happy about this policy decision,” Willison wrote. “As someone who develops against LLMs, interpretability and transparency are everything to me: the idea that I can run a complex prompt and have key details of how that prompt was evaluated hidden from me feels like a big step backwards.”
